Databricks Unity Catalog External Tables

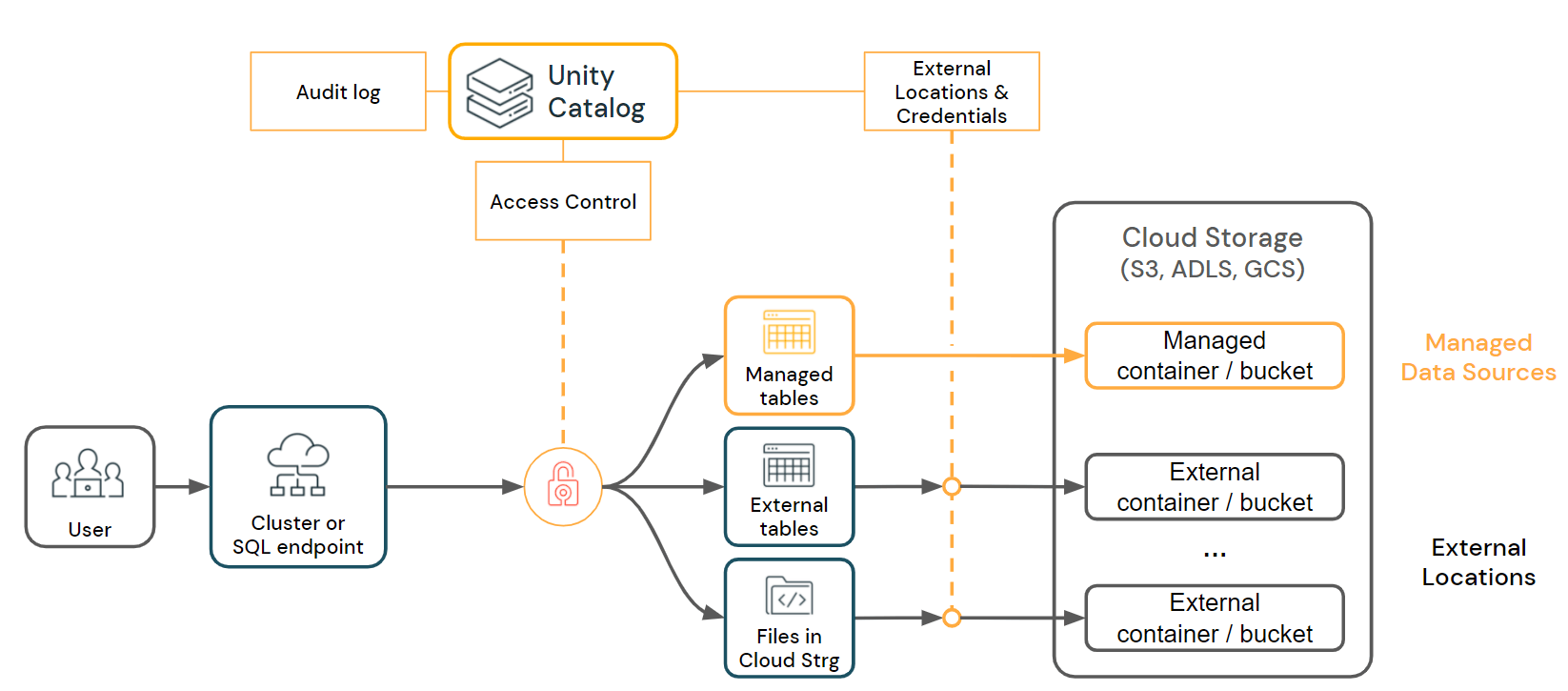

Databricks Unity Catalog External Tables - I am not sure if I am missing something, but I just created an external table using an external location, and I can still access the data both through the table and directly through the files. For managed tables, Unity Catalog fully manages the lifecycle and file layout. External tables store all data files in directories in a... Connect to external data: once you have external locations configured in Unity Catalog, you can create... An external location is an object... External tables in Databricks are similar to external tables in SQL Server.
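As a rough illustration of how an external location and an external table fit together, the sketch below assembles the two SQL statements as plain Python strings. The catalog, schema, table, bucket, and credential names are hypothetical placeholders, not values from the text. The statement shapes are meant to follow the Databricks SQL `CREATE EXTERNAL LOCATION` and `CREATE TABLE ... LOCATION` forms, so check them against your own workspace before running anything.

```python
def external_location_ddl(name: str, url: str, credential: str) -> str:
    """Build a CREATE EXTERNAL LOCATION statement (all names are placeholders)."""
    return (
        f"CREATE EXTERNAL LOCATION IF NOT EXISTS {name} "
        f"URL '{url}' WITH (STORAGE CREDENTIAL {credential})"
    )


def external_table_ddl(table: str, columns: dict, path: str) -> str:
    """Build a CREATE TABLE statement; the LOCATION clause makes the table external."""
    column_list = ", ".join(f"{col} {dtype}" for col, dtype in columns.items())
    return (
        f"CREATE TABLE IF NOT EXISTS {table} ({column_list}) "
        f"USING DELTA LOCATION '{path}'"
    )


# Hypothetical names, chosen only for illustration.
location_sql = external_location_ddl(
    "sales_ext", "s3://example-bucket/sales", "example_credential"
)
table_sql = external_table_ddl(
    "main.sales.orders",
    {"order_id": "BIGINT", "amount": "DOUBLE"},
    "s3://example-bucket/sales/orders",
)

print(location_sql)
print(table_sql)
# In a Databricks notebook, each string could then be passed to spark.sql(...).
```

The table path sits underneath the external location's URL, which is how the table is tied to a location that Unity Catalog already knows about.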

Databricks offers a unified platform for data, analytics and AI. Load and transform data using Apache Spark DataFrames.

To use the examples in this tutorial, your workspace must have Unity Catalog enabled. A metastore admin must enable external data access for each...

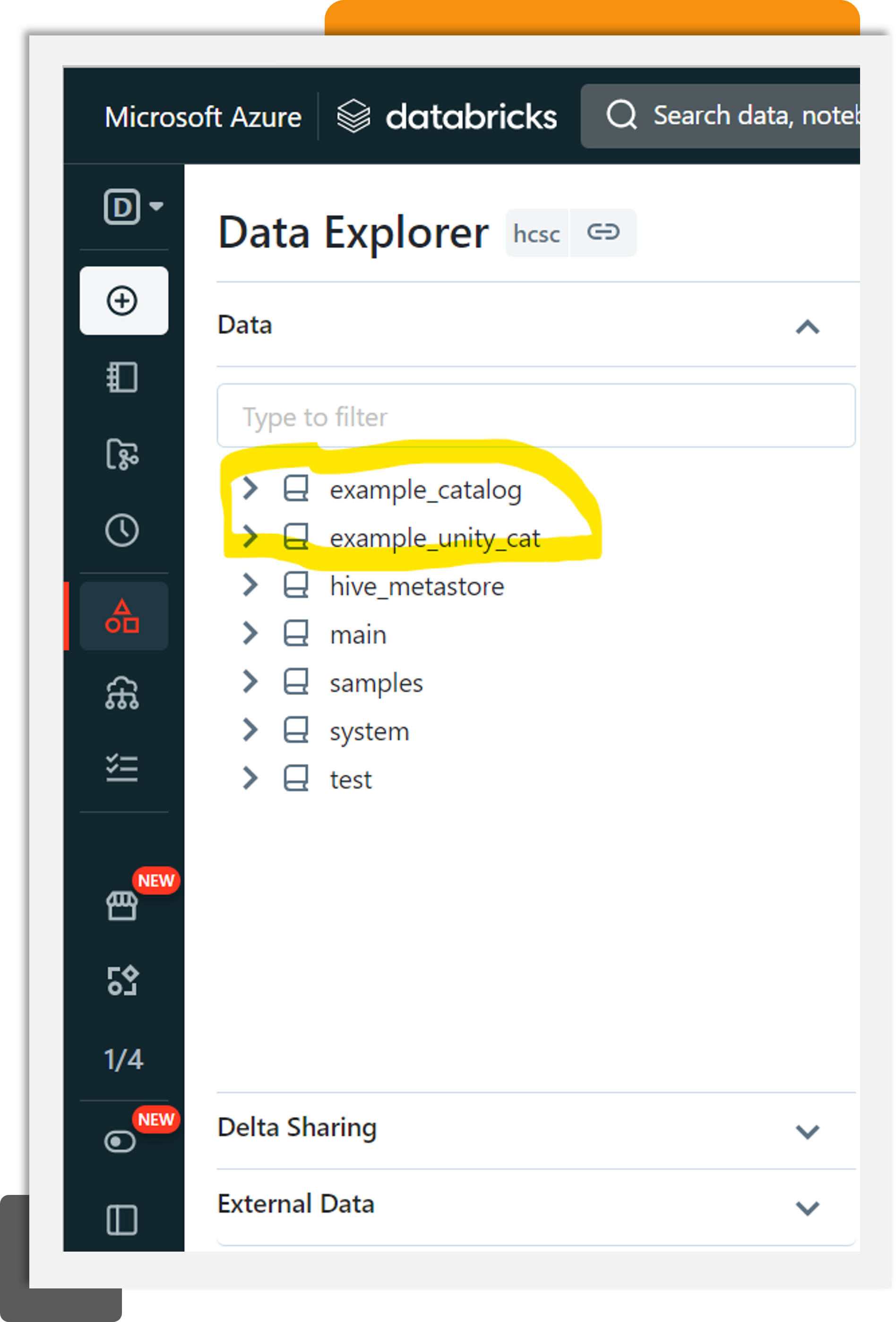

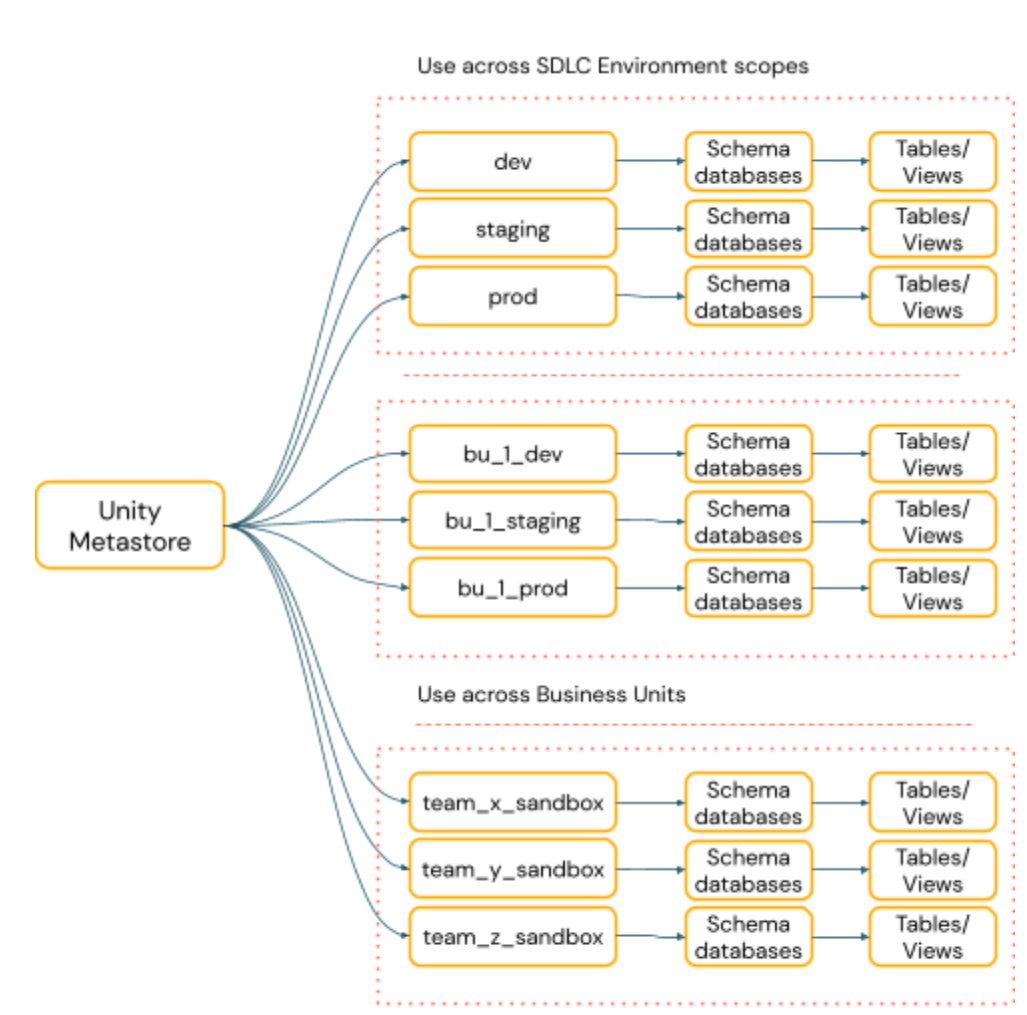

Learn about Unity Catalog external tables in Databricks SQL and Databricks Runtime. Unity Catalog (UC) is the foundation for all governance and management of data objects in the Databricks Data Intelligence Platform. Simplify ETL, data warehousing, governance and AI on the Data Intelligence Platform. The examples in this tutorial use a Unity Catalog volume to store sample data. Since its launch several years ago, Unity Catalog has... Databricks recommends configuring DLT pipelines with Unity Catalog. Sharing the Unity Catalog across Azure Databricks environments.
Unity Catalog governs data access permissions for external data for all queries that go through Unity Catalog but does not manage data lifecycle, optimizations, storage...
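To make the permissions point more concrete, here is a minimal sketch that builds the `GRANT` statements a group would typically need before it can query an external table through Unity Catalog. The group name `analysts` and the object names are assumptions made up for this example.

```python
def grant_sql(privilege: str, securable: str, name: str, principal: str) -> str:
    """Build a GRANT statement; the principal is backtick-quoted."""
    return f"GRANT {privilege} ON {securable} {name} TO `{principal}`"


# Hypothetical catalog, schema, table, and group names.
statements = [
    grant_sql("USE CATALOG", "CATALOG", "main", "analysts"),
    grant_sql("USE SCHEMA", "SCHEMA", "main.sales", "analysts"),
    grant_sql("SELECT", "TABLE", "main.sales.orders", "analysts"),
]

for statement in statements:
    print(statement)
# In a Databricks notebook, each string could then be passed to spark.sql(...).
```

These grants cover queries that go through the table name. They say nothing about who can reach the underlying files by path, which is governed separately.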

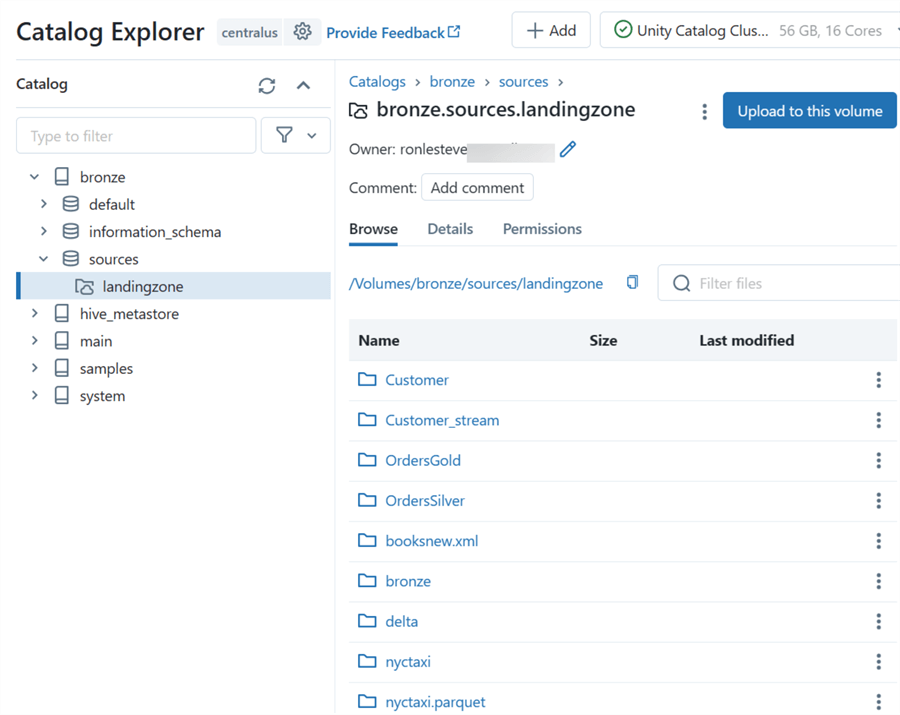

Learn how to ingest data, write queries, produce visualizations and dashboards, and configure... Databricks provides access to Unity Catalog tables using the Unity REST API and Iceberg REST catalog. Pipelines configured with Unity Catalog publish all... This article describes how to use the add data UI to create a managed table from data in Amazon S3 using a Unity Catalog external location.
External tables support many formats other than Delta Lake, including Parquet, ORC, CSV, and JSON.
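The format choice shows up in the `USING` clause of the table definition. The sketch below, offered only as an illustration, builds such a statement for the formats named above. The format set in the code is limited to those names and is not meant to be exhaustive, and the table name, path, and `header` option are hypothetical. Whether the schema can be inferred without a column list depends on the format and the workspace.

```python
# Formats named in the text above; the real list of supported formats may be longer.
NAMED_FORMATS = {"DELTA", "PARQUET", "ORC", "CSV", "JSON"}


def external_table_for_format(table, path, fmt, options=None):
    """Build a CREATE TABLE ... USING <format> ... LOCATION statement."""
    fmt_upper = fmt.upper()
    if fmt_upper not in NAMED_FORMATS:
        raise ValueError(f"format {fmt!r} is not in this example's list")
    parts = [f"CREATE TABLE IF NOT EXISTS {table}", f"USING {fmt_upper}"]
    if options:
        # Render reader options such as CSV's header flag.
        rendered = ", ".join(f"'{key}' = '{value}'" for key, value in options.items())
        parts.append(f"OPTIONS ({rendered})")
    parts.append(f"LOCATION '{path}'")
    return " ".join(parts)


# Hypothetical table name and storage path.
csv_sql = external_table_for_format(
    "main.raw.events_csv",
    "s3://example-bucket/raw/events",
    "csv",
    {"header": "true"},
)
print(csv_sql)
```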

DLT now integrates... We can use external tables to reference a file or folder that contains files with similar schemas. This article describes how to use the add data UI to create a managed table from data in Azure Data Lake Storage using a Unity Catalog external location.

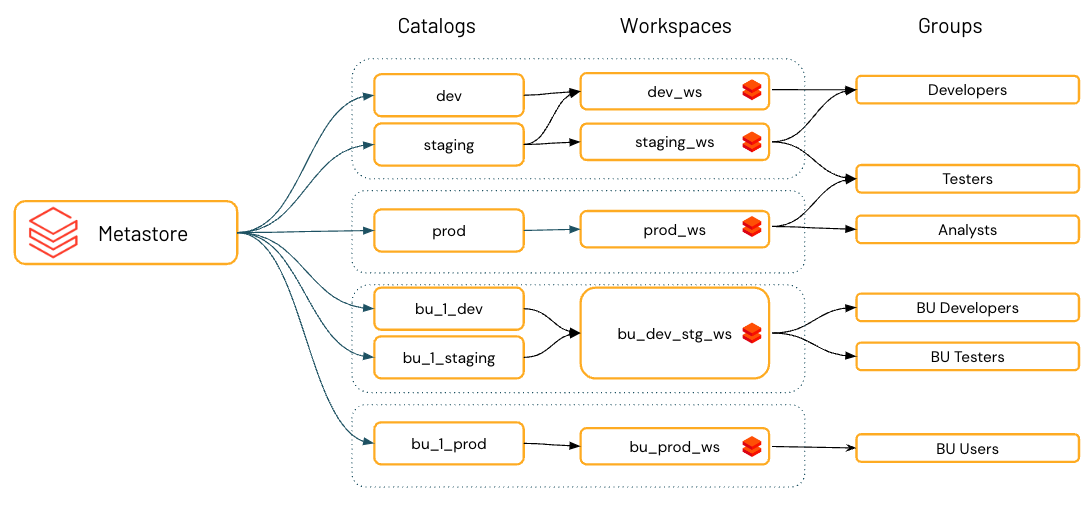

Data lineage in Unity Catalog.

13 Managed & External Tables in Unity Catalog vs Legacy Hive Metastore

A Comprehensive Guide Optimizing Azure Databricks Operations with

Generally Available: Publishing from Unity Catalog to the Microsoft Power BI Service Databricks Qiita

Query external Iceberg tables created and managed by Databricks with

Unity Catalog Enabled Databricks Workspace by Santosh Joshi Data

Databricks Unity Catalog and Volumes Step-by-Step Guide

Unity Catalog Setup A Guide to Implementing in Databricks

Introducing Unity Catalog A Unified Governance Solution for Lakehouse

An Ultimate Guide to Databricks Unity Catalog — Advancing Analytics

Unity Catalog best practices Databricks on AWS
